AI tools like ChatGPT have become incredibly popular since they were released.

As you may know, ChatGPT relies on the Generative Pre-trained Transformer model (GPT).

However, that’s not the only pre-trained model out there.

What Is BERT?

BERT is a deep learning model developed by Google AI Research that uses unsupervised learning to understand natural language queries better.

This makes it easier for machines to interpret human language as spoken in everyday life.

GPT-3.5 and GPT-4 consider only the left-to-right context. This unidirectional approach has limitations when it comes to text understanding, which can cause inaccuracies in generated outputs.

BERT, by contrast, considers the context in both directions.

Essentially, this means that BERT analyzes a sentence’s full context before providing an answer.

This allows it to “predict” a word from the context around it.

In sentences where one word can have two different meanings, this gives masked language models a distinct advantage.
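
To make the point about ambiguous words concrete, here is a minimal sketch in Python. It is not BERT and does not use any BERT library. It is a toy lookup in which the cue words, the sense labels, and the `guess_sense` function are all made up for illustration. It only shows that a word like "bank" can stay ambiguous when a reader sees the left context alone, and becomes clear once the right context is visible too.

```python
# Toy word-sense lookup: NOT BERT, just an illustration of why
# context on both sides of a word matters.
# The cue words and sense labels below are invented for this example.
SENSE_CUES = {
    "financial institution": {"deposit", "money", "loan", "account"},
    "river edge": {"river", "water", "fishing", "shore"},
}


def guess_sense(tokens, target_index, bidirectional):
    """Pick the sense whose cue words appear most often in the visible context."""
    left = tokens[:target_index]
    # A left-to-right reader cannot see anything after the target word.
    right = tokens[target_index + 1:] if bidirectional else []
    visible = set(left) | set(right)
    scores = {sense: len(cues & visible) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue word in view, the target stays ambiguous.
    return best if scores[best] > 0 else "ambiguous"


sentence = "she walked to the bank to deposit money".split()
target = sentence.index("bank")

print(guess_sense(sentence, target, bidirectional=False))  # ambiguous
print(guess_sense(sentence, target, bidirectional=True))   # financial institution
```

The deciding words ("deposit", "money") come after "bank", so only the reader that sees both sides can resolve the meaning.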

How Does BERT Work?

BERT’s bidirectional context enables it to process text from left to right and from right to left simultaneously.
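
One common way to picture the difference between the two approaches is as an attention mask: a grid that says which tokens each token is allowed to look at. The sketch below builds both kinds of grid in plain Python. The `attention_mask` helper and the example sentence are hypothetical, chosen only to illustrate the idea, and real models apply such masks inside their attention layers rather than printing them.

```python
def attention_mask(num_tokens, bidirectional):
    """Return a grid where mask[i][j] == 1 means token i may attend to token j."""
    return [
        # A left-to-right model only sees positions j <= i;
        # a bidirectional model sees every position.
        [1 if bidirectional or j <= i else 0 for j in range(num_tokens)]
        for i in range(num_tokens)
    ]


tokens = ["the", "cat", "sat", "down"]
causal = attention_mask(len(tokens), bidirectional=False)
full = attention_mask(len(tokens), bidirectional=True)

for name, mask in (("left-to-right", causal), ("bidirectional", full)):
    print(name)
    for token, row in zip(tokens, mask):
        print(f"  {token:>5} {row}")
```

In the left-to-right grid, "the" can only see itself, while in the bidirectional grid every token can see the whole sentence.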

The bidirectionality element has positioned BERT as a revolutionary transformer model, driving remarkable improvements in NLP tasks.

BERT’s effectiveness is not only because of its bidirectionality but also because of how it was pre-trained.

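During masked language modeling (MLM), some of the words in the input are hidden, and BERT learns to predict them from the surrounding context.

Then, during next sentence prediction (NSP), BERT learns to predict whether sentence Y genuinely follows sentence X.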
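
BERT's two pre-training objectives, masked language modeling (MLM) and next sentence prediction (NSP), can be sketched as data-preparation steps. The code below is a simplified illustration, not BERT's actual pipeline: the function names, the tiny corpus, and the `mask_prob` value passed in are assumptions for this example, and it always substitutes `[MASK]`, whereas the real procedure handles the selected tokens in a more varied way.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob, rng):
    """Hide a random subset of tokens; return the masked input and the hidden targets."""
    masked, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            targets[position] = token  # what the model would have to predict
        else:
            masked.append(token)
    return masked, targets


def make_nsp_pairs(sentences, rng):
    """Build (sentence_x, sentence_y, is_next) examples; needs at least 3 sentences."""
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            # Positive example: Y really is the sentence after X.
            pairs.append((sentences[i], sentences[i + 1], True))
        else:
            # Negative example: any sentence other than X or its true successor.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(candidates), False))
    return pairs


rng = random.Random(0)  # fixed seed so repeated runs give the same examples
corpus = [
    "the cat sat on the mat",
    "it purred quietly",
    "rain fell all night",
    "the streets were flooded by morning",
]

masked, targets = mask_tokens(corpus[0].split(), mask_prob=0.15, rng=rng)
pairs = make_nsp_pairs(corpus, rng)

print(masked, targets)
for x, y, is_next in pairs:
    print(is_next, "|", x, "->", y)
```

Each `targets` entry is a word the model would be asked to recover, and each `is_next` flag is the label it would be asked to predict for a sentence pair.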

Fine-tuning involves supervised learning, leveraging labeled data sets to enhance model performance for specific tasks.

This versatility is yet another reason for BERT’s popularity among NLP enthusiasts.

What Is BERT Commonly Used For?

This is crucial in numerous fields, from monitoring customer satisfaction to predicting stock market trends.

The result is high-quality, coherent summaries that accurately reflect the significant content of the input documents.

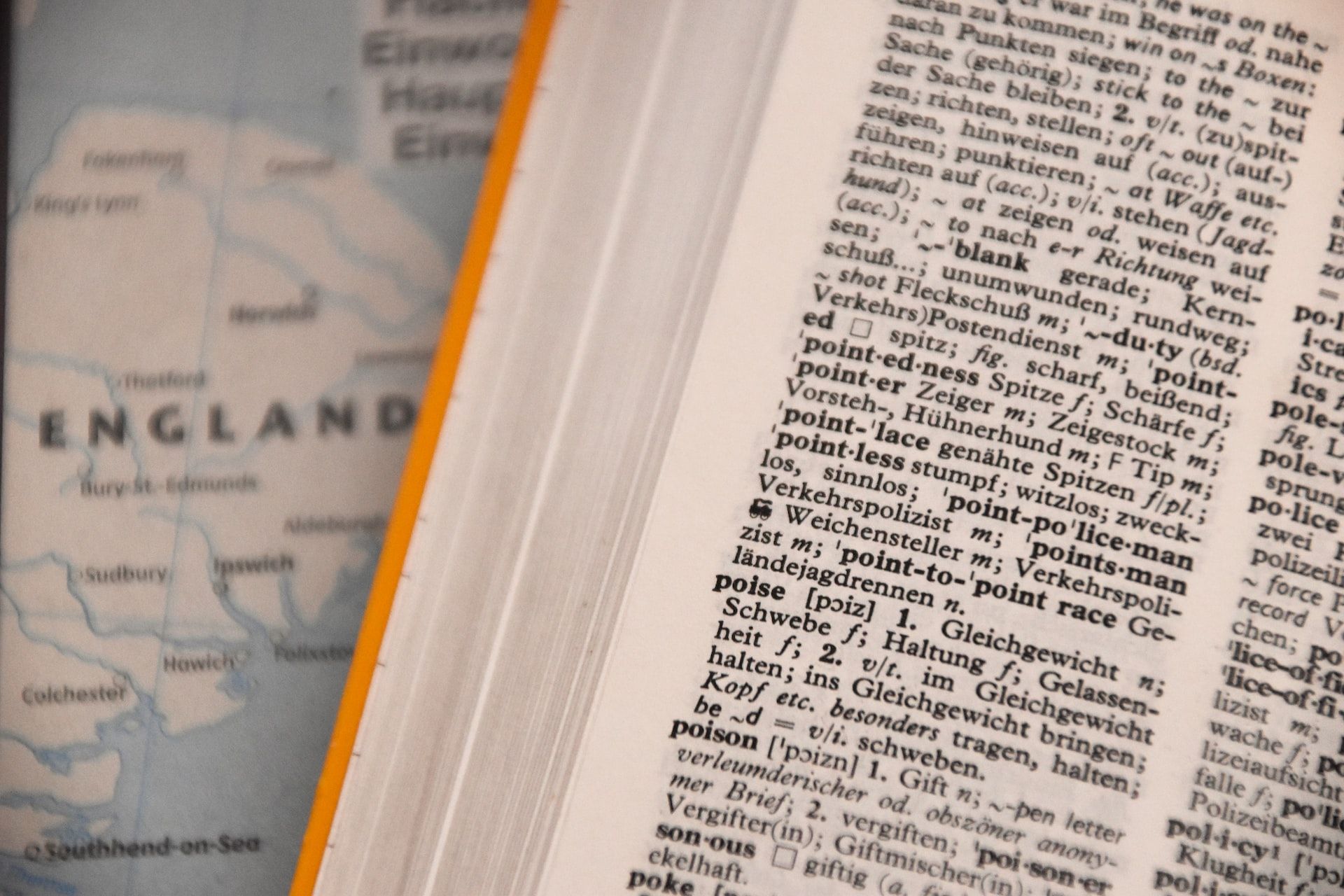

Machine Translation via BERT

Machine translation is an essential NLP task that BERT has improved.

But, more importantly, such tools can be easily integrated into existing workflows.