SafeToNet is a deep-tech, multi-faceted solution that goes well beyond AI behavioral analytics.

It analyses changes in children's behavior and notifies parents when it detects a suspicious change.

We’ve been training our algorithm to understand behavioral patterns, every minute, every day.

Parents install the app on their child's device and pair it with their own.

They can, however, manage the risks.

The rest is about giving parents appropriate advice on how to respond to such events.

Can this solution be used in an organisational setting?

We’re working with a global brand that had a situation.

That person was reported and lost his job.

People spend so much time on social networks that they could be putting their company at risk.

However, saying that such a product would be unpopular is an understatement.

How is it different when discussing child protection?

We read a lot about abuse and aggression online.

In the UK, we’re reaching a point where people have had enough.

The internet isn't regulated; people say what they like because it gives them a sense of anonymity.

Most reasonable people would say something has to be done.

What can you tell us about apps like Blue Whale?

Children download these apps because they think it’s cool to take risks.

There are a number of apps that can easily get you into trouble.

Software like SafeToNet is vital.

We can let parents know when these trends emerge, so they stay aware and alert.

There’s a Peppa Pig video on YouTube where Peppa takes a knife and cuts her own head off.

We can’t know every risk, so we use.

By building communities of collaborative safeguarding, parents can warn each other and keep their children safer.

We do this much faster than Google, because we gain no commercial benefit from displaying them.

Is there any regulation around safeguarding children online?

No, it's mainly self-regulation.

Certain apps set age limits, but those are easy to bypass.

What children do on those apps and who they interact with could vary greatly from one child to another.

Facebook openly admits that it has over 270 million “undesirable users” on its platform.

In Facebook's terminology, which is not clearly defined, this means either fake or duplicate accounts.

Sextortion is a huge global issue.

Kids are sending images of themselves to people they have never met face to face.

Our software is designed to identify this behavior using the multi-faceted tools we deploy.

You'd be surprised how much can be determined just from typing speed.

With people they know well, children typically fire off messages without hesitation, not giving them much thought.

When interacting with people they know less well, however, they tend to be more cautious and deliberate in choosing their words.

It seems to be OK to be abusive online.

For example, if I started calling you names, you would likely change your behavior pattern.

You might go quiet or raise your voice; those are the kind of changes we look for.

Typically, people in that situation become punchy and use fewer words.
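To make the idea of a "change in behavior pattern" concrete, here is a minimal sketch that compares a new message's typing speed and word count against a per-contact baseline using z-scores. This is not SafeToNet's algorithm: the two features, the hypothetical `BehaviorBaseline` class and `is_suspicious_change` function, and the threshold of 2.0 are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class BehaviorBaseline:
    """Hypothetical per-contact history of simple message features."""
    chars_per_second: list = field(default_factory=list)
    word_counts: list = field(default_factory=list)

    def add(self, chars_per_second: float, word_count: int) -> None:
        self.chars_per_second.append(chars_per_second)
        self.word_counts.append(word_count)


def z_score(value: float, history: list) -> float:
    """How many standard deviations `value` sits from the historical mean."""
    if len(history) < 2:  # a sample standard deviation needs two points
        return 0.0
    sd = stdev(history)
    if sd == 0:
        return 0.0
    return (value - mean(history)) / sd


def is_suspicious_change(baseline: BehaviorBaseline, chars_per_second: float,
                         word_count: int, threshold: float = 2.0) -> bool:
    """Flag a sharp drop in message length or an unusual typing speed (assumed rule)."""
    speed_z = z_score(chars_per_second, baseline.chars_per_second)
    words_z = z_score(word_count, baseline.word_counts)
    return words_z <= -threshold or abs(speed_z) >= threshold


baseline = BehaviorBaseline()
for speed, words in [(5.0, 20), (5.5, 22), (4.8, 18), (5.2, 21)]:
    baseline.add(speed, words)

print(is_suspicious_change(baseline, 5.1, 19))  # False: close to the usual pattern
print(is_suspicious_change(baseline, 5.1, 3))   # True: a much shorter, "punchy" reply
```

A real system would presumably use many more signals and learn thresholds per child rather than fixing them by hand; the z-score is used here only because it is the simplest way to express "different from this person's normal".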

What languages does SafeToNet support?

At the moment, SafeToNet is only supported in English.

Although translating the software is possible, teaching it to identify semantics in different languages could be very tricky.

What you find offensive may not be offensive to me, because we come from different cultures.

I might swear a lot, you might not.

With our software, parents can allow a certain level of profanity, or filter it out completely.
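A parent-selectable profanity setting could work roughly as in the sketch below, which masks words according to a chosen tolerance level. The `ProfanityLevel` values, the tiny word lists, and the `filter_message` function are hypothetical placeholders, not SafeToNet's actual settings; as noted above, real word lists would need to be far richer and culture- and language-aware.

```python
import re
from enum import Enum


class ProfanityLevel(Enum):
    """Hypothetical tolerance levels a parent might choose."""
    ALLOW_ALL = 0
    ALLOW_MILD = 1
    BLOCK_ALL = 2


# Placeholder word lists for illustration only.
MILD_WORDS = {"damn", "crap"}
STRONG_WORDS = {"bastard"}


def filter_message(text: str, level: ProfanityLevel) -> str:
    """Replace blocked words with asterisks according to the chosen level."""
    if level is ProfanityLevel.ALLOW_ALL:
        return text
    blocked = set(STRONG_WORDS)
    if level is ProfanityLevel.BLOCK_ALL:
        blocked |= MILD_WORDS

    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in blocked else word

    # Match runs of letters (and apostrophes) so punctuation is left intact.
    return re.sub(r"[A-Za-z']+", mask, text)


print(filter_message("Well damn, that was close", ProfanityLevel.ALLOW_MILD))
print(filter_message("Well damn, that was close", ProfanityLevel.BLOCK_ALL))
```

Word-list matching is the simplest possible approach and misses context entirely, which is exactly why understanding semantics, rather than just spotting words, matters.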

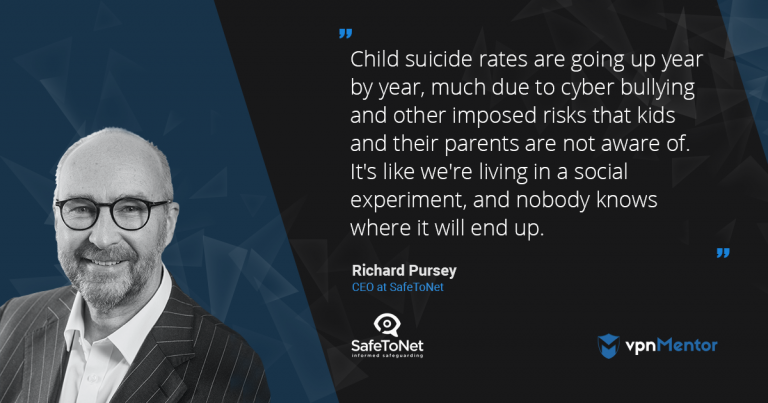

What changes can we expect to see in the near future with regard to child safeguarding online?

Many parents talk about how large corporations should act, but they aren't doing anything about it.

The way I see it, responsibility will slowly shift towards the parents.

Food packaging has warnings about the health risks, but there are no warnings for mobile devices and apps.

Child data privacy will become a standard part of life in the future.

If not, who knows where we’ll end up?
