What Is Nvidia Chat with RTX?

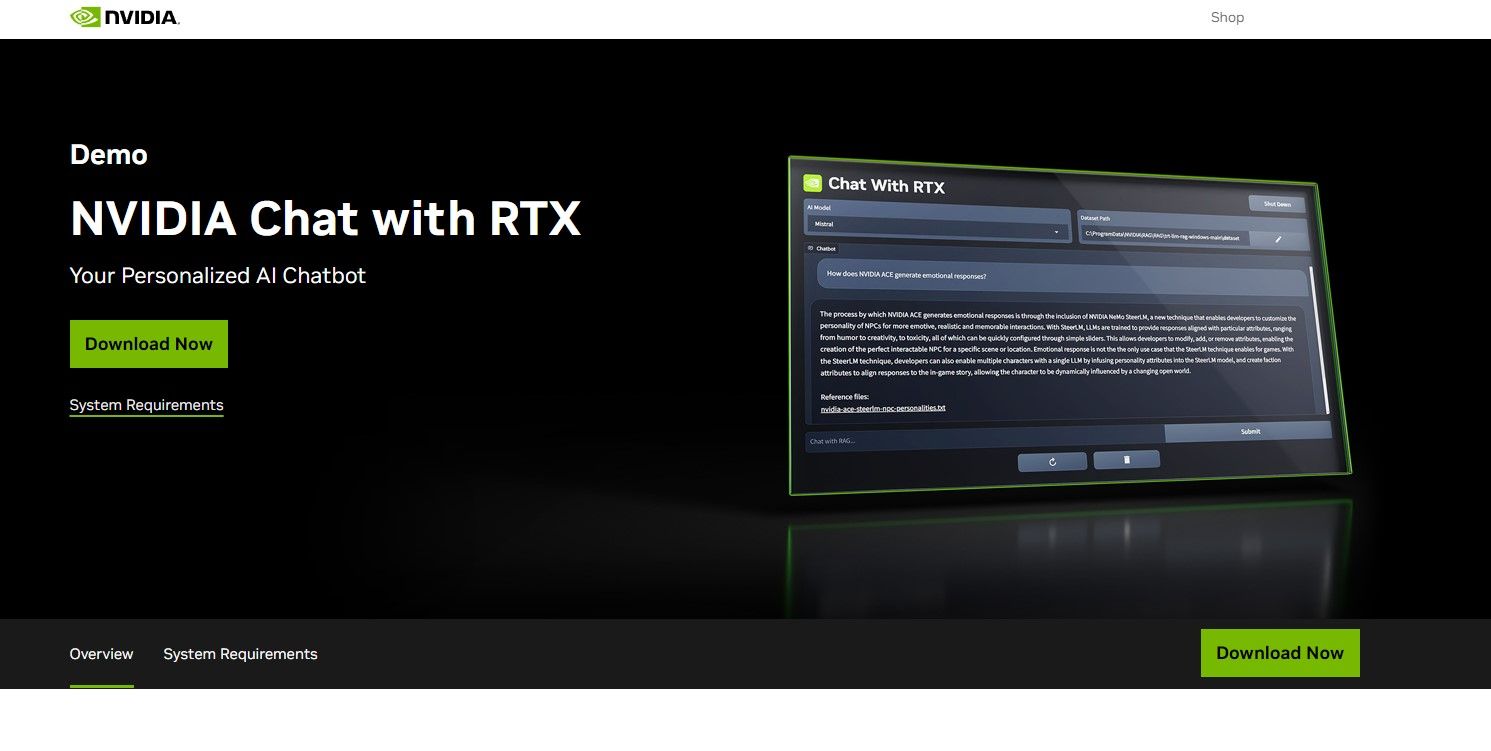

This allows you to customize the chatbot to provide a more personal experience.

However, Chat with RTX has minimum system requirements that your PC must meet before you can install and use it properly.

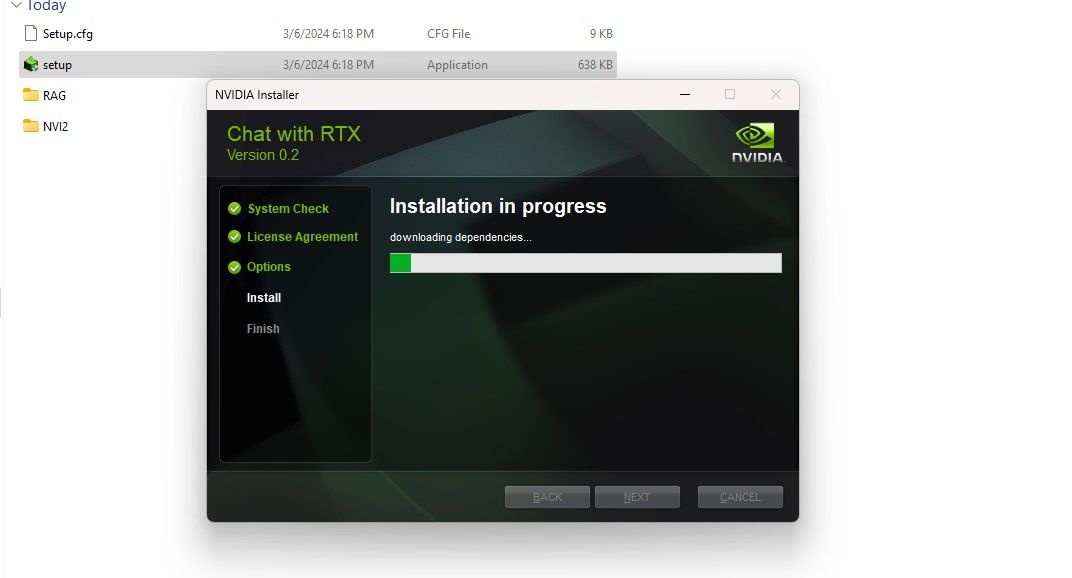

If your PC meets the minimum system requirements, you can go ahead and install the app.
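If you want a quick sanity check of the GPU part of the requirements, you can match your card's name against the RTX 30 and 40 series naming pattern. The snippet below is only a rough sketch: the helper name `is_rtx_30_or_40` is hypothetical, it looks at the model name alone (not VRAM, system memory, driver version, or operating system), and it is not how Nvidia's installer decides compatibility.

```python
import re

# Rough name check (an assumption for illustration, not Nvidia's own logic).
# Matches consumer names such as "NVIDIA GeForce RTX 3060" or "RTX 4090",
# i.e. "RTX" followed by 30x0 or 40x0 where x is 5-9.
_RTX_30_40 = re.compile(r"\bRTX\s*(?:30|40)[5-9]0(?!\d)", re.IGNORECASE)


def is_rtx_30_or_40(gpu_name: str) -> bool:
    """Return True if the GPU name looks like an RTX 30 or 40 series card."""
    return _RTX_30_40.search(gpu_name) is not None


if __name__ == "__main__":
    for name in ("NVIDIA GeForce RTX 3060 Ti", "NVIDIA GeForce GTX 1080"):
        print(name, "->", is_rtx_30_or_40(name))
```

You can read the GPU name from Windows Device Manager, or, if the Nvidia driver is installed, from `nvidia-smi --query-gpu=name --format=csv,noheader`, and pass that string to the function.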

After the installation process, hit Close, and you’re done.

Now, it’s time for you to try out the app.

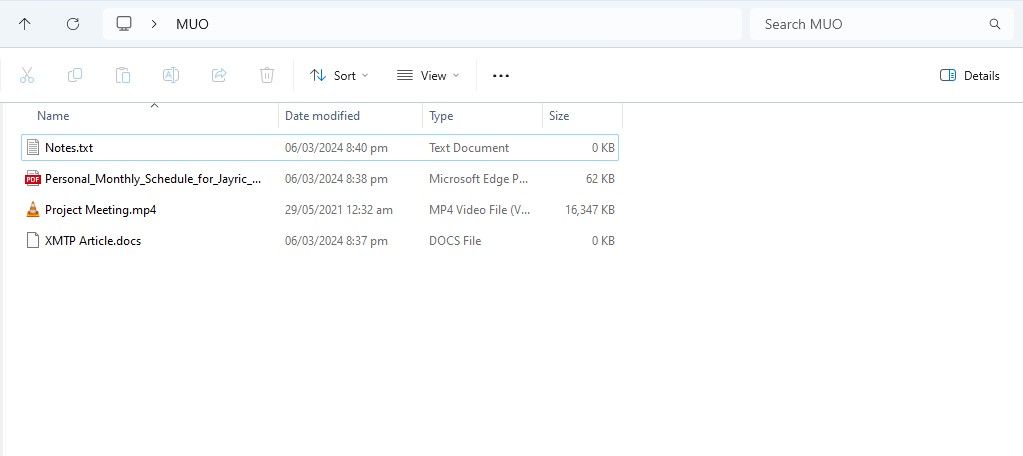

After creation, place your data files into the folder.
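If you have many files scattered in another directory, the folder step can be scripted. The following is a minimal sketch, not part of Chat with RTX itself: the function name `prepare_dataset_folder` and the default extension list are assumptions chosen for illustration, so adjust the extensions to whatever file types your version of the app accepts.

```python
import shutil
from pathlib import Path


def prepare_dataset_folder(source_dir, dataset_dir,
                           extensions=(".txt", ".pdf", ".docx")):
    """Create dataset_dir if needed and copy matching files into it.

    The extension tuple is an assumed example set, not taken from the app.
    Returns the names of the files that were copied.
    """
    source = Path(source_dir)
    dataset = Path(dataset_dir)
    dataset.mkdir(parents=True, exist_ok=True)  # no error if it already exists

    copied = []
    for path in sorted(source.iterdir()):
        # Only copy regular files whose extension is in the allowed set.
        if path.is_file() and path.suffix.lower() in extensions:
            shutil.copy2(path, dataset / path.name)
            copied.append(path.name)
    return copied
```

Calling `prepare_dataset_folder("Documents/notes", "Documents/rtx-data")` (both paths are placeholders) would leave the originals untouched and put copies in the data folder, which you can then point Chat with RTX at.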

Step 2: Set Up Environment

Open Chat with RTX.

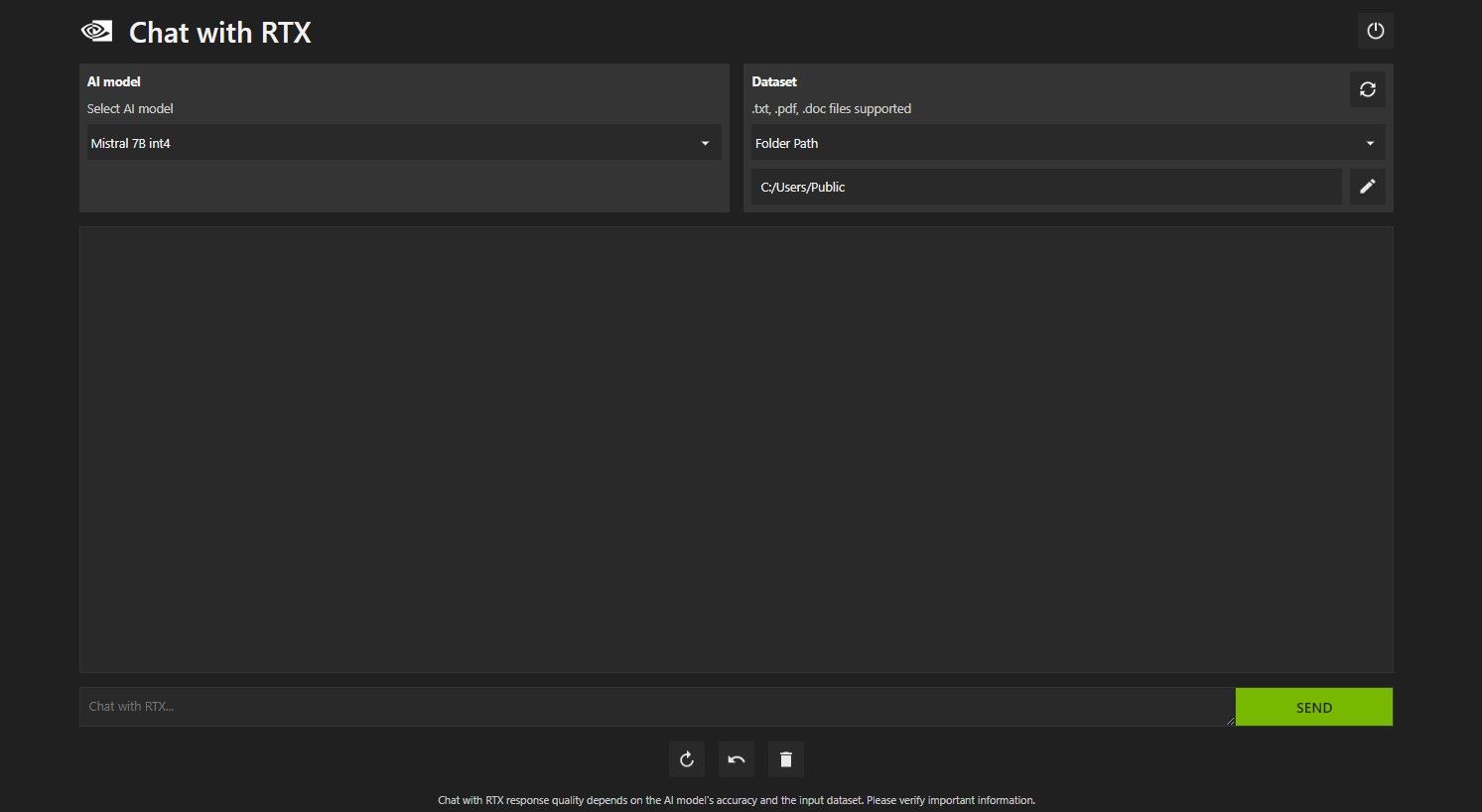

It should look like the image below.

Under Dataset, ensure that the Folder Path option is selected.

You are now ready to use Chat with RTX.

Step 3: Ask Chat with RTX Your Questions!

There are several ways to query Chat with RTX.

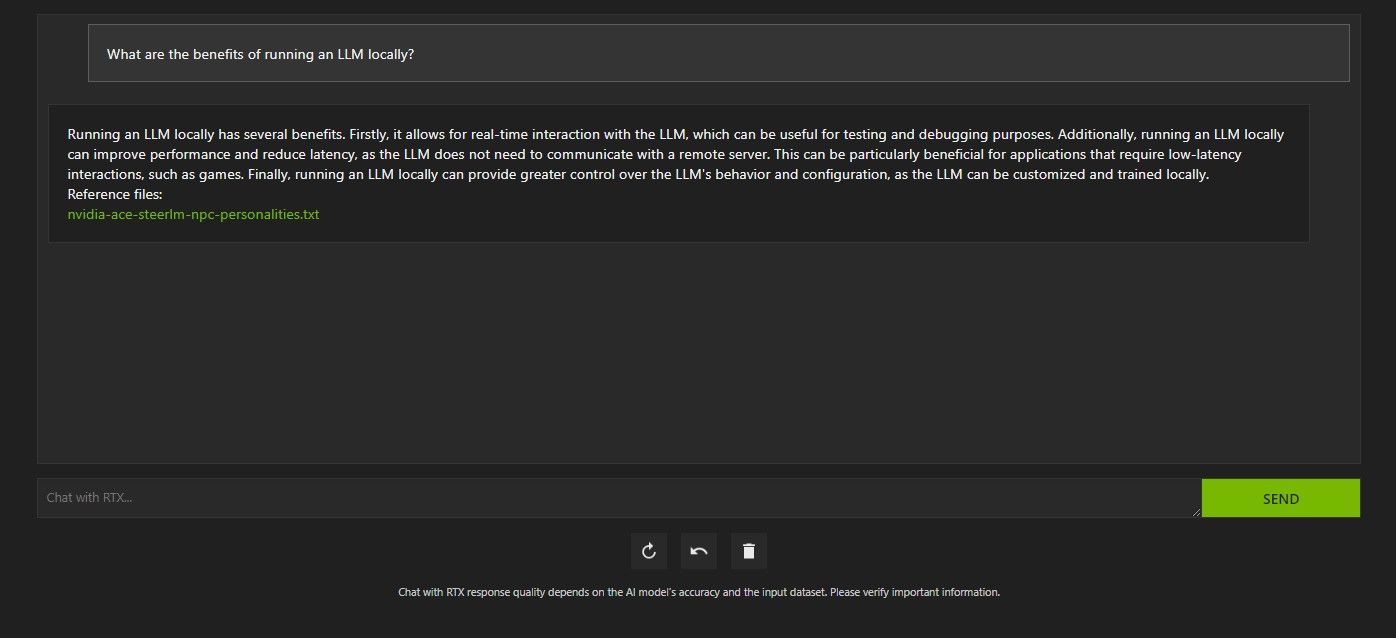

The first one is to use it like a regular AI chatbot.

I asked Chat with RTX about the benefits of using a local LLM and was satisfied with its answer.

It wasn’t enormously in-depth, but it was accurate enough.

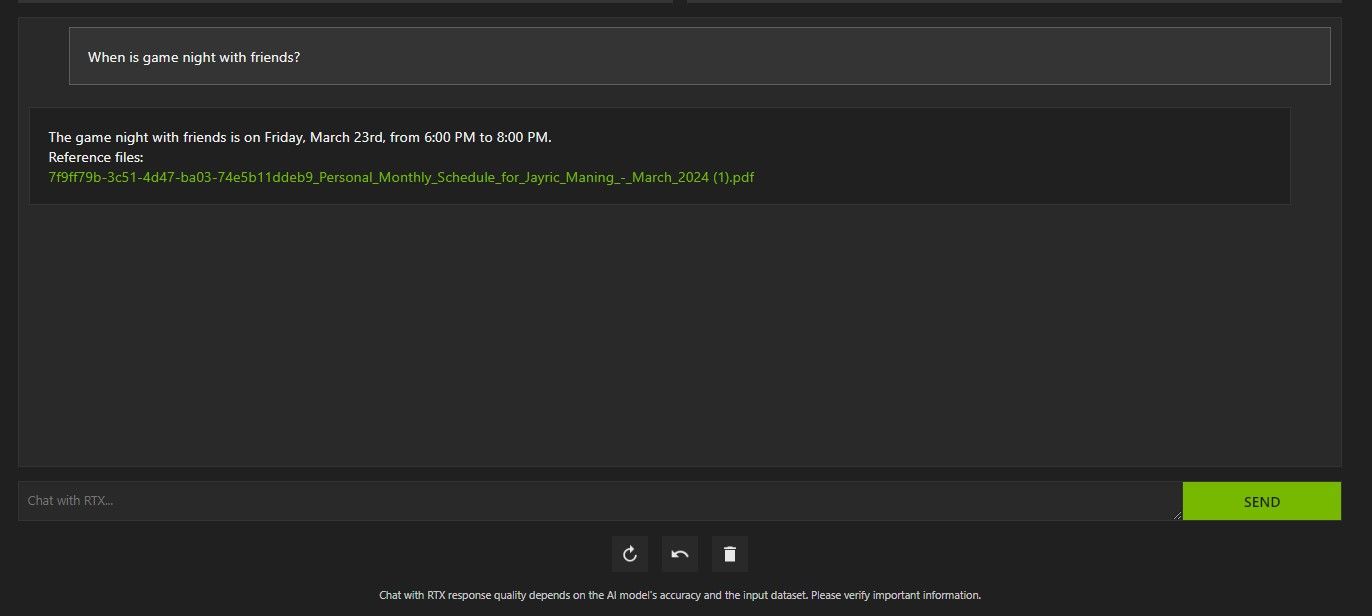

Above, I’ve used Chat with RTX to ask about my schedule.

There are many ways you could use Chat with RTX’s RAG to your advantage.
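To make the idea behind RAG more concrete, here is a toy sketch of the general pattern: pick the document most relevant to the question, then hand it to the model as context. This is not Chat with RTX's actual pipeline, which this article does not describe; real RAG systems typically use embedding-based search rather than the simple word overlap used here, and the function names and sample documents are made up for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Return the names of the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(query_words & tokenize(item[1])),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


def build_prompt(query, documents, top_k=1):
    """Combine the retrieved text and the question into one prompt string."""
    context = "\n".join(documents[name] for name in retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = {
        "schedule.txt": "Monday: dentist appointment at 10 am",
        "recipes.txt": "Pancakes need flour, eggs and milk",
    }
    print(build_prompt("When is my dentist appointment?", docs))
```

The prompt that comes out contains only the schedule text, so a language model answering it would draw on your file rather than on its general training data. That is the essence of what lets a local chatbot answer questions about your own documents.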

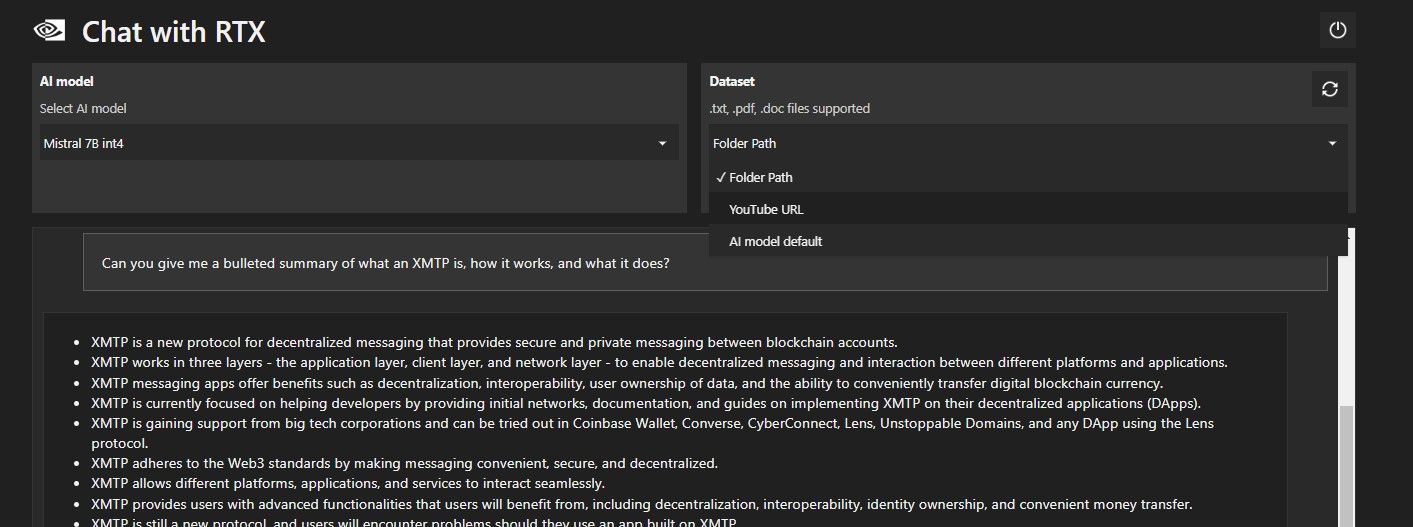

To do so, under Dataset, change the Folder Path to YouTube URL.

Copy the YouTube URL you want to analyze and paste it below the drop-down menu.

Is Nvidia’s Chat with RTX Any Good?

ChatGPT provides RAG functionality. Some local AI chatbots have significantly lower system requirements.

So, is Nvidia Chat with RTX even worth using?

The answer is yes!

Chat with RTX is worth using despite the competition.

Compared with other locally running AI chatbots that use Mistral 7B, Chat with RTX performs better and faster.

It is worth noting that the Chat with RTX version we are currently using is a demo.

Later releases of Chat with RTX will likely become more optimized and deliver performance boosts.

What if I Don’t Have an RTX 30 or 40 Series GPU?

Two of the most popular alternatives for running an LLM locally are GPT4ALL and Text Gen WebUI.

Try GPT4ALL if you want a plug-and-play experience locally running an LLM.