Machine learning is a great way to create artificial intelligence that is powerful and adapts to its training data.

But sometimes, that data can cause issues.

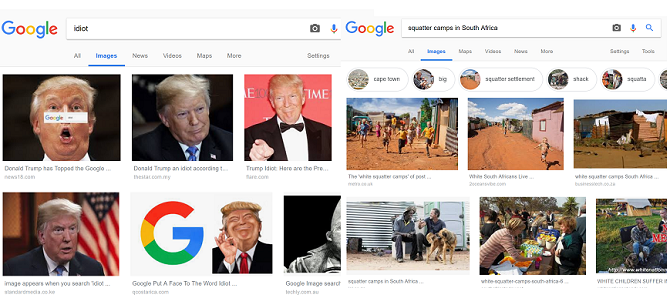

Google Image Search Result Mishaps

Google Search has made navigating the web a whole lot easier.

The engine’s algorithm takes a variety of factors into consideration when generating results.

But the algorithm also learns from user traffic, which can cause problems for search result quality.

Nowhere is this more apparent than in image results.

This is despite statistics showing that the overwhelming majority of those living in informal housing are black South Africans.

The factors used in Google’s algorithm also mean that internet users can manipulate results.

One of the most high-profile incidents of chatbots gone awry was Microsoft’s attempt to launch its chatbot Tay.

Tay mimicked the language patterns of a teenage girl and learned through her interactions with other Twitter users.

Not long after, Microsoft took Tay offline for good.

But this AI has a problematic history when attempting to recognize people of color.

In 2015, users discovered that Google Photos was categorizing some black people as gorillas.

Another incident involved Apple’s Face ID software incorrectly identifying two different Chinese women as the same person.

As a result, the iPhone X owner’s colleague could unlock the phone.

Software like FaceApp allows you to face-swap subjects from one video into another.

AI-powered image generators have amplified the power of fake images.

Employees who wished to remain anonymous came forward to tell Reuters about their work on the project.

Developers wanted the AI to identify the best candidates for a job based on their CVs.

However, people involved in the project soon noticed that the AI penalized female candidates.

As a result, the AI began filtering out CVs based on the keyword “women”.

The keyword appeared in the CV under activities such as “women’s chess club captain”.

While developers altered the AI to prevent this penalization of women’s CVs, Amazon ultimately scrapped the project.
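To see how a keyword penalty like this can emerge from training data alone, here is a minimal Python sketch. It is not Amazon's system, whose details were never published. The toy hiring history, the function names `token_scores` and `score_cv`, and the simple log-odds scoring are all assumptions made for illustration. The point is only that a scorer fitted to a skewed record will assign a negative weight to any word that shows up mostly in rejected CVs.

```python
from collections import defaultdict
import math

# Hypothetical toy history: (CV text, was the candidate hired?).
# Invented data that mimics a skewed hiring record; it is not real.
HISTORY = [
    ("software engineer chess club captain", True),
    ("software engineer robotics team lead", True),
    ("data analyst chess club member", True),
    ("software engineer women's chess club captain", False),
    ("data analyst women's robotics team lead", False),
    ("data analyst robotics team member", False),
]

def token_scores(history, smoothing=1.0):
    """Return a log-odds score per token; positive means 'seen more in hired CVs'."""
    hired = defaultdict(int)
    rejected = defaultdict(int)
    for text, was_hired in history:
        # Count each token once per CV.
        for token in set(text.split()):
            if was_hired:
                hired[token] += 1
            else:
                rejected[token] += 1
    vocab = set(hired) | set(rejected)
    # Additive smoothing keeps the ratio finite when a count is zero.
    return {
        t: math.log((hired[t] + smoothing) / (rejected[t] + smoothing))
        for t in vocab
    }

def score_cv(text, scores):
    """Sum the token scores of a CV; unseen tokens contribute 0."""
    return sum(scores.get(t, 0.0) for t in set(text.split()))

scores = token_scores(HISTORY)
with_keyword = score_cv("software engineer women's chess club captain", scores)
without_keyword = score_cv("software engineer chess club captain", scores)

print(f"score for \"women's\": {scores[chr(34) + 'x' + chr(34)] if False else scores[chr(119) + 'omen' + chr(39) + 's']:.3f}")
print(f"CV with keyword:    {with_keyword:.3f}")
print(f"CV without keyword: {without_keyword:.3f}")
```

The two CVs scored at the end differ by a single word, yet the one containing it ranks lower, because the only signal the scorer has is who was hired in the past. Removing one keyword from such a model does not remove the underlying skew in the data, since other words correlated with the same group can pick up the same penalty.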

In 2023, Forcepoint security researcher Aaron Mulgrew was able to create zero-day malware using ChatGPT prompts.

These crashes included serious injuries and six fatalities.